This post refers to an early version of elastalert-ci, and technical implementation details mentioned below may not apply. Please read the README on the project repository for accurate information on how to use elastalert-ci within your project.

tldr

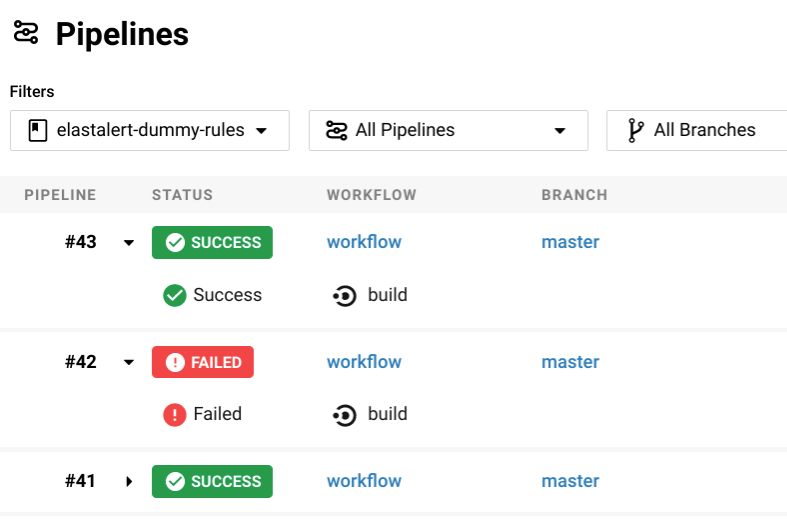

I have created a Docker image that can be used to continuously test Elastalert rules against Elasticsearch data, to verify that new rules and edits to existing rules work as expected.

If you want to head straight to the code, the CI image is defined here, and you can also look at how a CircleCI config that uses the image should be structured here.

Motivation

I’ve been using Elastalert quite extensively for a few months now, and one of the processes that isn’t as neat as it could be is testing. The current workflow I use is to write a rule that looks sensible, and then either test it locally or simply push the rule and do the actions that should trigger an alert on the running instance. The first method is fairly time-consuming and not easy to replicate in an identical manner; the second is time-consuming, annoying for other users of the system, and somewhat risky. Neither option is particularly ‘DevSecOps’ either.

In addition, I’ve been meaning to play with CircleCI for some time, because it’s a word that I’ve been hearing frequently and I wanted to see what it was like.

When Marco mentioned that Elastalert provided an elastalert-test-rule function, I thought that this would be a simple project: wrap that test script in a Docker container and use that container in CircleCI. The reality, as it always does, turned out to be significantly more involved, but I got there in the end.

Development

The aim

elastalert-test-rule was described in a way that made it seem ideal to use as-is in a Dockerfile. It claims to provide a --data argument that allows a user to submit a file of Elasticsearch data that will be matched against locally (without spinning up an Elasticsearch instance), and a --formatted-output argument that “[outputs] results in formatted JSON”.

My initial idea was to use these options to create a single-container CI image that, when provided with a rule, would use the testing system’s mocking functionality to run matching rules. I would then capture the ‘formatted JSON’ output, and return whether a rule matched or not.

Problem 1: the script doesn’t work

The first problem I came up against (and a worrying one at that) was that the elastalert-test-rule script doesn’t work. A few months ago, Elastalert made the switch from Python 2 to Python 3, and it looks as though some functionality had been forgotten in the process. I put in a PR for the issue I was having, but it was clear that I was the only person to have used this script with the --data flag for a long while. While I waited for the PR to be accepted, I used sed in the Dockerfile to carry out my change on-the-fly at build time.

Problem 2: --data doesn’t work as described

In the Elastalert documentation, the --data flag is described as allowing you to “Use a JSON file as a data source _instead_ of Elasticsearch” (emphasis mine). This was the main attraction for me, as it would reduce the overhead of running a test. When actually running the script, however, it would error if it wasn’t able to contact Elasticsearch, as the script was trying to create an Elasticsearch object regardless of whether the --data flag was provided.

In addition, I discovered through testing that using the --data flag doesn’t apply any of the filters that your rule defines. For example, if you supply elastalert-test-rule with a dataset that has 1000 records, and the filter in the rule you are testing should match none of those records, using elastalert-test-rule --data would result in 1000 hits regardless. In hindsight, this is somewhat obvious - Elasticsearch handles the querying and it would take significant work for Elastalert to mock this functionality. Either way, the limitation isn’t obvious from the documentation, and severely reduces the value of the --data option. I would need to find a way of running an Elasticsearch container in addition to the Elastalert container, and making the two of them talk to each other.

This was probably the most time-consuming challenge. For local testing, I switched to using docker-compose, which allows you to manage multiple containers using a couple of commands, allowing those containers to talk to each other on their own network. CircleCI also allows you to do similar things, which made it surprisingly easy to spin up an Elasticsearch container that would sit in the background waiting for Elastalert to run against it on either platform. I wrote a wrapper script to avoid using --data, instead uploading the necessary data directly to Elasticsearch.
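As a rough illustration of that upload step, here is a minimal Python sketch. It is not the actual wrapper script: the function names, the index name and the host URL are all made up for the example, and it assumes Elasticsearch 7 or later (where the bulk action line needs no document type). It sends the records through the standard _bulk endpoint as newline-delimited JSON.

```python
import json
import urllib.request


def build_bulk_body(index, documents):
    """Serialise documents into the newline-delimited JSON the _bulk API expects."""
    lines = []
    for doc in documents:
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(doc))  # document source line
    # A bulk body has to end with a newline.
    return "\n".join(lines) + "\n"


def upload(es_url, index, documents):
    """POST the documents to Elasticsearch.

    es_url is an assumed base URL such as http://elasticsearch:9200.
    refresh=true makes the documents searchable as soon as the call returns.
    """
    request = urllib.request.Request(
        f"{es_url}/_bulk?refresh=true",
        data=build_bulk_body(index, documents).encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Because the data now lives in a real index, the filters in the rule are evaluated by Elasticsearch itself, which is exactly what --data could not offer.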

My final issue on this front was that Elasticsearch takes quite a while to start up, especially when running on low-spec containers. Elastalert would start up much faster, and would then exit on not being able to talk to Elasticsearch. I discovered dockerize, which can be used as a wrapper around your Docker CMD with the -wait option. This basically polls an HTTP endpoint every second for a predetermined amount of time; if/when the endpoint returns a 200 status code, dockerize hands over to your Docker CMD. This stopped my Elastalert container from panicking immediately at runtime, and hasn’t failed to work yet, even though it’s quite a crude solution.
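The waiting behaviour described above can be sketched in a few lines of Python. This is not how dockerize is implemented, only an illustration of the same idea; the function names, the default timeout and the injectable probe and sleep parameters are assumptions made for the example.

```python
import http.client
import time
import urllib.error
import urllib.request


def http_ok(url):
    """Return True if a GET request to url answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeouts and error statuses all count as "not ready".
        return False


def wait_for(url, timeout=120.0, interval=1.0, probe=http_ok, sleep=time.sleep):
    """Poll url until probe succeeds, giving up after about timeout seconds of waiting."""
    waited = 0.0
    while True:
        if probe(url):
            return True
        if waited >= timeout:
            return False
        sleep(interval)
        waited += interval
```

A wrapper would call something like wait_for("http://elasticsearch:9200") and only start Elastalert once it returns True. The probe and sleep arguments exist purely so the loop can be exercised without a network.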

Problem 3: --formatted-output is not entirely formatted

By the time I got to this point I had what I thought were the most complicated parts of the puzzle sorted: I had a pair of containers that could run in either docker-compose or CircleCI, uploading test data to the Elasticsearch instance and then running Elastalert against it. I then began wrapping the Elastalert output: Elastalert would return a 0 exit code if it managed to run successfully regardless of whether rules matched or not, and I needed more granular insight into the rule output.

I discovered here that --formatted-output does indeed print the output as JSON, but there is no easy way to direct this output to a location of your choosing. I initially worked around this by modifying the elastalert-test-rule script directly, changing it to write to a file, but I then thought that it might be neater for me to simply capture the output of STDOUT in my wrapper script and work with that. I then discovered that elastalert-test-rule with the --formatted-output option prints two lines of unformatted plaintext output before outputting the formatted JSON. At this point I was too tired to switch back to directly editing the testing script, so I simply used a Python regex to remove the first two lines of the output file before parsing the JSON. I used to be a Perl developer in a previous life.
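For the curious, a regex of that kind might look like the sketch below. It is a reconstruction of the idea, not the code from the project: the function name is invented, and it assumes exactly two leading plaintext lines followed by nothing but JSON.

```python
import json
import re

# Matches the first two lines of the text, including their trailing newlines.
FIRST_TWO_LINES = re.compile(r"\A(?:[^\n]*\n){2}")


def parse_formatted_output(raw):
    """Drop the two leading plaintext lines, then parse what remains as JSON."""
    return json.loads(FIRST_TWO_LINES.sub("", raw, count=1))
```

If the script ever prints a different number of preamble lines, json.loads will raise an error rather than silently returning the wrong thing, which is about as much safety as this approach offers.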

The home stretch

These were all the key problems fixed: I finally had a system by which I could run a rule against some data and check whether it fired or not, returning an appropriate exit code depending on the result. In a final burst of motivation, I also solved the following minor issues:

- Timestamps: Elastalert normally runs over the last day of data, but I found a poorly documented elastalert-test-rule feature that allows you to provide --start and --end times, so that you don’t have to keep changing the timestamps on your data files. I added the concept of a ‘data index’ that should live in the repository with the rules and the data, which defines metadata about the dataset, and allows multiple rules to easily point to the same dataset.

- Recursion: I used CircleCI’s globbing functionality to find all the rules within a folder, even if they are nested, which I expect is a common use case. Rules that don’t have CI metadata defined are skipped by the wrapper script.

- Parallelism: I used CircleCI’s parallelism and tests split functionality to add parallelism to the testing, which I expect will help significantly with large rulesets.
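To make the timestamp idea concrete, here is a small Python sketch of how a data index entry could be turned into an elastalert-test-rule invocation. The shape of the data index, the dataset name, the file paths and the dates are all hypothetical and do not reflect the format the project actually uses; only the --start, --end and --formatted-output flags come from the text above.

```python
# Hypothetical data index: one entry per dataset, shared by any number of rules.
DATA_INDEX = {
    "ssh-logins": {
        "file": "data/ssh-logins.json",  # made-up path
        "start": "2020-01-01T00:00:00",  # made-up time window covering the data
        "end": "2020-01-02T00:00:00",
    },
}


def build_test_command(rule_path, dataset):
    """Build the elastalert-test-rule argument list for one rule and its dataset entry."""
    return [
        "elastalert-test-rule",
        rule_path,
        "--formatted-output",
        "--start", dataset["start"],
        "--end", dataset["end"],
    ]
```

The resulting list could be handed to subprocess.run, so the time window is pinned to the dataset rather than to whenever the build happens to run.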

The CI image and an example ruleset are both on GitHub for those interested.

Future work

The current script is quite brittle - in the excitement of making something I haven’t really been focusing too hard on edge cases. If there is interest, or if I actually end up using this for practical purposes, I would want to tidy the code up significantly so that it is more maintainable.

The wrapper function is also quite naive - it can tell you that an alert has fired, but can’t tell you the number of matches to the rule, or whether some metadata you wanted in the alert text has been correctly picked up. I do hope to add this functionality soon, as it shouldn’t be too difficult.

It should also be possible to state that a particular dataset shouldn’t cause a rule to fire, and test for that. That functionality doesn’t exist yet.

It should also be possible to easily run a single rule against multiple datasets, testing its behaviour against each one. Again, this isn’t possible yet.

Ideally, however, this is a starting point to creating reliable, repeatable CI builds for Elastalert rules. For security, which is the field that I work in, being able to assure ourselves that our rules work as we expect them to is a big piece of knowing that we are functional as a team. I have seen other mentions of CI pipelines for security detection rules, but I haven’t seen any open source work in the area, so hopefully this is a useful contribution as a starting point.